2025-06-30 22:00:00

Newly discovered genes could make the powerful cancer drug Taxol cheaper and more sustainable to produce.

Stroll through ancient churchyards in England, and you’ll likely see yew trees with dark green needles and stunning ruby red fruits guarding the graves. These coniferous trees are known in European folklore as a symbol of death and doom.

They’re anything but. The Pacific yew naturally synthesizes paclitaxel, commonly known as Taxol, a chemotherapy drug widely used to fight multiple types of aggressive cancer. In the 1990s, it was FDA-approved for breast, ovarian, and lung cancer and, since then, has been used off-label for roughly a dozen other malignancies. It’s a modern success story showing how we can translate plant biology into therapeutic drugs.

But because Taxol is produced in the tree’s bark, harvesting the life-saving chemical kills its host. Yew trees are slow-growing with very long lives, making them an unsustainable resource. If scientists can unravel the genetic recipe for Taxol, they can recreate the steps in other plants—or even in yeast or bacteria—to synthesize the molecule at scale without harming the trees.

A new study in Nature takes us closer to that goal. Taxol is made from a precursor chemical called baccatin III, which is just a few chemical steps removed from the final product and is produced in yew needles. After analyzing thousands of yew tree cells, the team mapped a 17-gene pathway leading to the production of baccatin III.

They added these genes to tobacco plants, which don’t naturally produce baccatin III, and found that the plants readily pumped out the chemical at levels similar to those in yew needles.

The results are “a breakthrough in our understanding of the genes responsible for the biological production of this drug,” wrote Jakob Franke at Leibniz University Hannover, who was not involved in the study. “The findings are a major leap forward in efforts to secure a reliable supply of paclitaxel.”

Humans have long used plants as a source of therapeutic drugs.

More than 3,500 years ago, Egyptians found that willow bark can lower fevers and reduce pain. Chemists have since tweaked its active ingredient, and the result, aspirin, is now sold in every drugstore. Germany has approved a molecule from lavender flowers for anxiety disorders, and early clinical trials suggest some compounds from licorice root may help protect the liver.

The yew tree first caught scientists’ attention in the late 1960s, when they were screening a host of plant extracts for potential anticancer drugs. Most were duds or too toxic. Taxol stood out for its unique effects against tumors. The molecule locks in place the microtubules that form a “skeleton-like” scaffold inside cells, preventing cancer cells from dividing and kneecapping their ability to grow.

Taxol was a blockbuster success, but the medical community was concerned that natural yew trees couldn’t meet clinical demand. Scientists soon began trying to artificially synthesize the drug. The discovery of baccatin III, which can be turned into Taxol after some chemical tinkering, was a game-changer in their quest. This Taxol precursor occurs in much larger quantities in the needles of various yew species, which can be harvested without killing the trees. But the conversion requires multiple chemical steps and is expensive.

Making either baccatin III or Taxol from scratch using synthetic biology—that is, transferring the necessary genes into other plants or microorganisms—would be a more efficient alternative and could boost production at an industrial scale. For the idea to work, however, scientists would need to trace the entire pathway of genes involved in the chemicals’ production.

Two teams recently sorted through yew trees’ nearly 50,000 genes and discovered a minimal set of genes needed to make baccatin III. Franke called this a “breakthrough” achievement, but adding the genes to tobacco plants yielded very low amounts of the chemical.

Unlike bacterial genomes, where genes that work together are often located near one another, related genes in plants are often sprinkled throughout the genome. This confetti-like organization makes it easy to miss critical genes involved in the production of chemicals.

The new study employed a simple but “highly innovative strategy,” Franke wrote.

Yew plants produce more baccatin III as a defense mechanism when under attack. By stressing yew needles, the team reasoned, they could identify which genes switch on together. Scientists already know several genes involved in baccatin III production, so these known genes could serve as bait to fish out those still missing from the recipe.

The team dunked freshly clipped yew needles into plates lined with wells containing water and fertilizer—picture mini succulent trays. To these, they added stressors such as salts, hormones, and bacteria to spur baccatin III production. The setup simultaneously screened hundreds of combinations of stressors.

The team then sequenced mRNA—a proxy for gene expression—from more than 17,000 single cells to track which genes were activated together and under what conditions.
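The logic of using known pathway genes as bait can be illustrated with a simple co-expression ranking. The sketch below is a minimal illustration under stated assumptions, not the study’s actual analysis pipeline: it assumes a cells-by-genes expression matrix, scores every other gene by its average Pearson correlation with the known “bait” genes across cells, and uses a hypothetical simulated dataset (`simulate_cells`, with made-up genes `known_A`, `known_B`, and `hidden_X`) in place of real yew data.

```python
import numpy as np


def rank_coexpressed(expr, gene_names, bait_genes, top_k=5):
    """Rank non-bait genes by mean Pearson correlation with known bait genes.

    expr: array of shape (n_cells, n_genes) holding expression per cell.
    """
    index = {g: i for i, g in enumerate(gene_names)}
    bait_idx = [index[g] for g in bait_genes]
    # Convert each gene's profile across cells into z-scores.
    centered = expr - expr.mean(axis=0)
    std = centered.std(axis=0)
    std[std == 0] = 1.0  # genes with no variation end up with a correlation of 0
    z = centered / std
    # With population z-scores, Pearson r is the mean product across cells.
    corr = z.T @ z[:, bait_idx] / expr.shape[0]  # shape (n_genes, n_bait)
    score = corr.mean(axis=1)
    ranked = [
        (gene_names[i], float(score[i]))
        for i in np.argsort(-score)
        if gene_names[i] not in bait_genes
    ]
    return ranked[:top_k]


def simulate_cells(seed=0, n_cells=2000, n_noise=50):
    """Hypothetical toy data: three genes follow one hidden pathway activity."""
    rng = np.random.default_rng(seed)
    activity = rng.gamma(2.0, 1.0, size=n_cells)  # stand-in for stress-driven activity
    genes = ["known_A", "known_B", "hidden_X"] + [f"noise_{i}" for i in range(n_noise)]
    expr = rng.normal(0.0, 1.0, size=(n_cells, len(genes)))  # unrelated genes
    for j in range(3):
        expr[:, j] = activity + rng.normal(0.0, 0.3, size=n_cells)
    return expr, genes


if __name__ == "__main__":
    expr, genes = simulate_cells()
    for name, r in rank_coexpressed(expr, genes, ["known_A", "known_B"], top_k=3):
        print(f"{name}: mean r = {r:.2f}")
```

In this toy, `hidden_X` should rank first because it tracks the same hidden activity as the bait genes. Real single-cell analyses add normalization, filtering, and statistical controls that this sketch omits.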

The team found eight new genes involved in Taxol synthesis. One, dubbed FoTO1, was especially critical for boosting the yield of multiple essential precursors, including baccatin III. The gene had “never before been implicated in such biochemical pathways” and “would have been almost impossible to find by conventional approaches,” wrote Franke.

They spliced 17 genes essential to baccatin III production into tobacco plants, a species commonly used to study plant genetics. The upgraded tobacco produced the molecule at similar—or sometimes even higher—levels compared to yew tree needles.

Although the work is an important step, relying on tobacco plants has its own problems. The added genes can’t be passed down to offspring, meaning every generation has to be engineered, which makes the technology hard to scale up. Alternatively, scientists might use microbes, which are easy to grow at scale and already used to make pharmaceuticals.

“Theoretically, with a little more tinkering, we could really make a lot of this and no longer need the yew at all to get baccatin,” said study author Conor McClune in a press release.

The end goal, however, is to produce Taxol from beginning to end. Although the team mapped the entire pathway for baccatin III synthesis—and discovered one gene that converts it to Taxol—the recipe is still missing two critical enzymes.

Surprisingly, a separate group at the University of Copenhagen nailed down genes encoding those enzymes this April. Piecing the two studies together makes it theoretically possible to synthesize Taxol from scratch, which McClune and colleagues are ready to try.

“Taxol has been the holy grail of biosynthesis in the plant natural products world,” said study author Elizabeth Sattely.

The team’s approach could also benefit other scientists eager to explore a universe of potential new medicines in plants. Chinese, Indian, and indigenous cultures in the Americas have long relied on plants as a source of healing. Modern technologies are now beginning to unravel why.

The post Scientists Genetically Engineer Tobacco Plants to Pump Out a Popular Cancer Drug appeared first on SingularityHub.

2025-06-28 22:00:00

The wheel changed the course of history for all of humanity. But its invention is shrouded in mystery.

Imagine you’re a copper miner in southeastern Europe in the year 3900 BCE. Day after day, you haul copper ore through the mine’s sweltering tunnels.

You’ve resigned yourself to the grueling monotony of mining life. Then one afternoon, you witness a fellow worker doing something remarkable.

With an odd-looking contraption, he casually transports the equivalent of three times his body weight on a single trip. As he returns to the mine to fetch another load, it suddenly dawns on you that your chosen profession is about to get far less taxing and much more lucrative.

What you don’t realize: You’re witnessing something that will change the course of history—not just for your tiny mining community, but for all of humanity.

Despite the wheel’s immeasurable impact, no one is certain who invented it, or when and where it was first conceived. The hypothetical scenario described above is based on a 2015 theory that miners in the Carpathian Mountains, in present-day Hungary, first invented the wheel nearly 6,000 years ago as a means to transport copper ore.

The theory is supported by the discovery of more than 150 miniaturized wagons by archaeologists working in the region. These pint-sized, four-wheeled models were made from clay, and their outer surfaces were engraved with a wickerwork pattern reminiscent of the basketry used by mining communities at the time. Carbon dating later revealed that these wagons are the earliest known depictions of wheeled transport.

This theory also raises a question of particular interest to me, an aerospace engineer who studies the science of engineering design. How did an obscure, scientifically naive mining society discover the wheel, when highly advanced civilizations, such as the ancient Egyptians, did not?

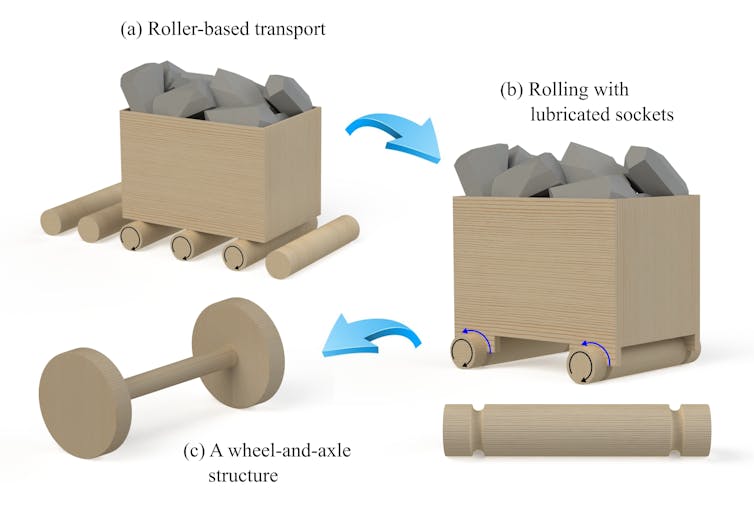

It has long been assumed that wheels evolved from simple wooden rollers. But until recently no one could explain how or why this transformation took place. What’s more, starting in the 1960s, some researchers expressed strong doubts about the roller-to-wheel theory.

After all, for rollers to be useful, they require flat, firm terrain and a path free of inclines and sharp curves. Furthermore, once the cart passes them, used rollers need to be continually brought around to the front of the line to keep the cargo moving. For all these reasons, the ancient world used rollers sparingly. According to the skeptics, rollers were too rare and too impractical to have been the starting point for the evolution of the wheel.

But a mine—with its enclosed, human-made passageways—would have provided favorable conditions for rollers. This factor, among others, compelled my team to revisit the roller hypothesis.

The transition from rollers to wheels requires two key innovations. The first is a modification of the cart that carries the cargo. The cart’s base must be outfitted with semicircular sockets, which hold the rollers in place. This way, as the operator pulls the cart, the rollers are pulled along with it.

This innovation may have been motivated by the confined nature of the mine environment, where having to periodically carry used rollers back around to the front of the cart would have been especially onerous.

The discovery of socketed rollers represented a turning point in the evolution of the wheel and paved the way for the second and most important innovation. This next step involved a change to the rollers themselves. To understand how and why this change occurred, we turned to physics and computer-aided engineering.

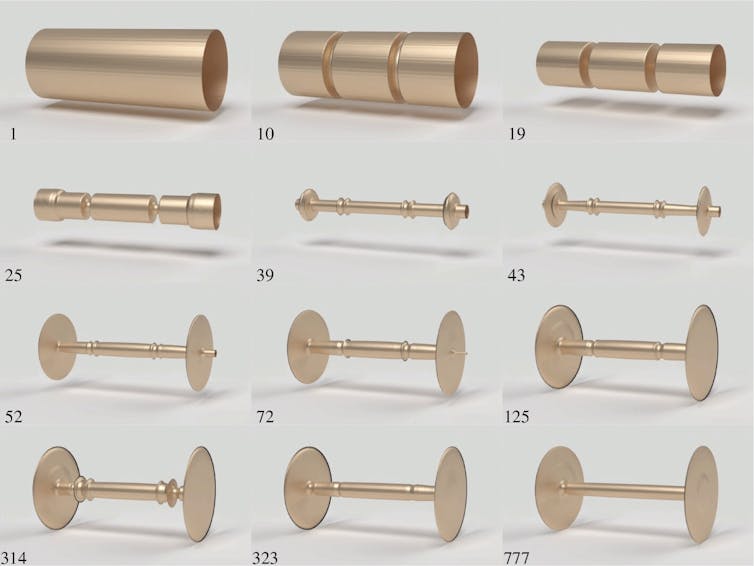

To begin our investigation, we created a computer program designed to simulate the evolution from a roller to a wheel. Our hypothesis was that this transformation was driven by a phenomenon called “mechanical advantage.” This same principle allows pliers to amplify a user’s grip strength by providing added leverage. Similarly, if we could modify the shape of the roller to generate mechanical advantage, this would amplify the user’s pushing force, making it easier to advance the cart.
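The leverage argument can be made concrete with an idealized wheel-and-axle model. This is a textbook simplification, not the authors’ simulation: assume the cart’s load N presses on the roller through a socket of radius r, the roller meets the ground at radius R without slipping, sliding friction at the socket has coefficient μ, and there are no other losses. When the cart advances a distance d, the roller turns through an angle θ = d/R, so the socket surface slides only a distance s, and the work done by the pull F equals the friction loss:

```latex
\begin{aligned}
\theta &= \frac{d}{R}, \qquad s = r\,\theta = \frac{r}{R}\,d \\
F\,d &= \mu N\,s \quad\Longrightarrow\quad F = \mu N\,\frac{r}{R} \\
\frac{F_{r=R}}{F} &= \frac{R}{r}
\end{aligned}
```

A uniform socketed roller (r = R) needs the full pull μN; halving the radius where the roller sits in the socket halves the required pull, which is the amplification the pliers analogy describes.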

Our algorithm worked by modeling hundreds of potential roller shapes and evaluating how each one performed, both in terms of mechanical advantage and structural strength. The latter was used to determine whether a given roller would break under the weight of the cargo. As predicted, the algorithm ultimately converged upon the familiar wheel-and-axle shape, which it determined to be optimal.
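The same idea can be sketched as a toy optimization. This is not the authors’ algorithm, which modeled hundreds of full roller shapes; it is a two-parameter illustration under loudly stated assumptions. It describes a roller by a hypothetical axle radius `r` and disc radius `R`, assumes (as in an idealized wheel-and-axle) that the pull needed scales with r/R so the mechanical advantage is R/r, checks strength with the standard bending-stress formula for a solid circular shaft, σ = 4M/(πr³), and uses made-up values for the bending moment, allowable stress, and maximum disc radius. A greedy hill climber keeps a mutated design only if it stays within the strength limit and is easier to push than its predecessor.

```python
import math
import random

# Hypothetical parameters, chosen only for illustration (not from the study).
R_MAX = 0.20            # m: largest disc radius the cart and tunnel allow
BENDING_MOMENT = 150.0  # N*m: bending moment the axle must carry
SIGMA_ALLOW = 10e6      # Pa: assumed allowable bending stress for the wood


def axle_stress(r):
    """Peak bending stress in a solid circular axle of radius r: 4M / (pi r^3)."""
    return 4.0 * BENDING_MOMENT / (math.pi * r ** 3)


def feasible(r, R):
    """Geometry must make sense and the axle must not break under the load."""
    return 0.0 < r <= R <= R_MAX and axle_stress(r) <= SIGMA_ALLOW


def mechanical_advantage(r, R):
    """Assumes the pull scales as r/R, so the advantage over a plain roller is R/r."""
    return R / r


def evolve_roller(r=0.10, R=0.10, steps=5000, step_size=0.005, seed=0):
    """Greedy hill climbing: keep a mutated design only if it is feasible and better."""
    rng = random.Random(seed)
    if not feasible(r, R):
        raise ValueError("start from a feasible roller")
    history = [mechanical_advantage(r, R)]
    for _ in range(steps):
        # Mutate both radii slightly, like copying a roller with small variations.
        r_new = r + rng.uniform(-step_size, step_size)
        R_new = R + rng.uniform(-step_size, step_size)
        if feasible(r_new, R_new) and (
            mechanical_advantage(r_new, R_new) > mechanical_advantage(r, R)
        ):
            r, R = r_new, R_new
            history.append(mechanical_advantage(r, R))
    return r, R, history


if __name__ == "__main__":
    r, R, history = evolve_roller()
    print(f"axle radius {r:.4f} m, disc radius {R:.4f} m, advantage {history[-1]:.2f}")
```

Starting from a plain roller (r = R), each accepted design is slightly better than the last, and the search drifts toward the thinnest axle the strength limit allows, capped by the largest permitted discs. With these made-up numbers, that minimum axle radius is (4M/(πσ))^(1/3), about 2.7 cm.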

During the execution of the algorithm, each new design performed slightly better than its predecessor. We believe a similar evolutionary process played out with the miners 6,000 years ago.

It is unclear what initially prompted the miners to explore alternative roller shapes. One possibility is that friction at the roller-socket interface caused the surrounding wood to wear away, leading to a slight narrowing of the roller at the point of contact. Another theory is that the miners began thinning out the rollers so that their carts could pass over small obstructions on the ground.

Either way, thanks to mechanical advantage, this narrowing of the axle region made the carts easier to push. As time passed, better-performing designs were repeatedly favored over the others, and new rollers were crafted to mimic these top performers.

Consequently, the rollers grew narrower and narrower until all that remained was a slender bar capped on both ends by large discs. This rudimentary structure marks the birth of what we now call “the wheel.”

According to our theory, there was no precise moment at which the wheel was invented. Rather, just like the evolution of species, the wheel emerged gradually from an accumulation of small improvements.

This is just one of the many chapters in the wheel’s long and ongoing evolution. More than 5,000 years after the contributions of the Carpathian miners, a Parisian bicycle mechanic invented radial ball bearings, which once again revolutionized wheeled transportation.

Ironically, ball bearings are essentially tiny rollers, the wheel’s evolutionary precursor. Ball bearings form a ring around the axle, creating a rolling interface between the axle and the wheel hub and thereby greatly reducing friction. With this innovation, the evolution of the wheel came full circle.

This example also shows how the wheel’s evolution, much like its iconic shape, traces a circuitous path—one with no clear beginning, no end, and countless quiet revolutions along the way.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

The post How Was the Wheel Invented? Computer Simulations Reveal Its Unlikely Birth Nearly 6,000 Years Ago appeared first on SingularityHub.

2025-06-27 22:00:00

New molecular shuttles can carry antibodies and enzymes to treat cancer and other brain diseases.

Our brain is a fortress. Its delicate interior is completely surrounded by a protective wall called the blood-brain barrier.

True to its name, the barrier separates the contents of the blood from the brain. It keeps bacteria and other pathogens in the blood away from delicate brain tissue while allowing oxygen and some nutrients to flow through.

The protection comes at a cost: Medications made up of large molecules often can’t enter the brain. These include antibodies that block the formation of protein clumps in Alzheimer’s disease, immunotherapies that destroy deadly brain tumors, and replacement enzymes that could rescue inherited developmental diseases.

For decades, scientists have tried to smuggle these larger drugs into the brain without damaging the barrier. Now, thanks to a new crop of molecular shuttles, they’re on the brink of success. A top contender is based on transferrin, a protein that supplies iron to the brain—an element needed for myriad critical chemical reactions to ensure healthy brain function.

Although still in its infancy, the shuttle has already changed lives. One example is Hunter syndrome, a rare and incurable genetic disease in which brain cells lack a crucial enzyme. Kids with the syndrome begin losing their language, hearing, and movement as young toddlers. In severe cases, their lives are tragically cut short at 10 to 20 years of age.

Early results in clinical trials using the transferrin shuttle are showing promise. Kids and adults with Hunter syndrome received the missing enzyme, attached to the shuttle, through a shot into a vein; as the enzyme reached their brains, the patients gradually regained their ability to speak, walk, and run around. Without the shuttle, the enzyme is too big to pass through the blood-brain barrier.

If the shuttle passes further safety testing and can be adapted for different cargo, it could potentially ferry a wide range of large biotherapeutics—including gene therapies—directly into the brain with a simple jab in the arm. From cancer to neurodegenerative disorders and other common brain diseases such as stroke, it would open a new world of therapeutic possibilities.

We often talk about the body and the brain as separate entities. In a way, they are. The blood-brain barrier, a tightly woven sheet of cells that lines vessels throughout the brain, regulates which molecules can enter. The cells are built like a brick wall—the molecules holding them together are literally called “tight junctions.”

But they’re not impenetrable. Small molecules, such as oxygen and caffeine, can drift past the barrier, giving us that morning hit of energy with a good cup of coffee. Once inside the brain, these molecules can easily spread throughout the organ to feed energy-hungry tissues. Other molecules, such as glucose (sugar) or iron, require special protein “transporters” dotted along the surfaces of the barrier cells to enter.

Transporters are very specific about their cargo and can usually grab onto only one type of molecule. Once loaded up, the proteins pull the molecule into the interior of barrier cells by forming a fatty bubble around it, like a spaceship. The ship drifts across the barrier, with its cargo protected inside, and releases its contents into the brain. In other words, larger molecules can cross from the blood into the brain by hitching a ride on the right transporter, without the barrier being broken down.

Some clever ideas are already being tested.

One of these is inspired by viruses that naturally infect the brain, such as HIV. After examining HIV’s protein sequence, scientists found a short, safe section called TAT that helps the virus tunnel through the barrier. Using protein engineering, they can then physically attach small peptides (just a dozen or so protein “letters” long) to the TAT shuttle. Clinical trials are already underway using the system to reduce damage from stroke with just an injection. But the tiny carrier struggles with larger proteins such as antibodies or enzymes.

More recently, scientists took a hint from the transport mechanisms already embedded in the barrier, that is, why and how it allows some larger proteins in. The idea came from trials for Alzheimer’s disease. Breaking up the protein clumps characteristic of the disease with antibodies has shown promise in slowing symptoms, but the antibodies are hard to deliver into the brain with an intravenous shot.

In most cases, roughly 0.1 percent of the treatment actually penetrates the brain, meaning that much higher doses are needed, adding expense and increasing the risk of side effects. The antibodies also crowd around blood vessels inside the brain rather than moving deeper.
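The dose arithmetic behind that claim is simple. As a rough illustration that ignores clearance, binding, and how the drug distributes through the body, suppose a fraction f of an intravenous dose reaches the brain (the symbols here are illustrative, not quantities from a specific trial). The amount that must be injected to deliver a target amount to the brain is then:

```latex
D_{\text{injected}} = \frac{D_{\text{brain}}}{f}, \qquad
f \approx 0.001 \;\Longrightarrow\; D_{\text{injected}} \approx 1000\,D_{\text{brain}}
```

At 0.1 percent penetration, roughly a thousand times the brain-bound amount has to circulate in the body, which is where the added expense and side-effect risk come from.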

One transporter, in particular, caught scientists’ eyes: transferrin. This large protein—picture a four-leaf clover—captures iron in the blood and then attaches itself to the barrier. Transferrin’s “stem” acts like a beacon, telling the barrier the cargo is safe to be shuttled into the brain. Barrier cells encapsulate transferrin for the voyage across and release it on the other side.

Rather than trying to engineer the entire protein, scientists synthesized only its stem—the most important part—which can then be connected to almost any large cargo. Multiple studies have found that the shuttle is relatively safe and doesn’t jeopardize normal iron processing in the brain. Cargos retained their function after the journey and once inside the brain.

Transferrin-based shuttles are being investigated for a wide range of brain disorders.

In Hunter syndrome, a shuttle carrying a missing enzyme has shown early success. The therapy is effective, in part, because the shuttles end up inside a cell’s waste factories, or lysosomes. These acid-filled pouches are natural parking spots for the shuttle and its cargo—they’re also where the enzyme needs to go, making the condition a perfect use case.

Scientists are also eyeing other brain disorders such as Alzheimer’s disease, in which toxic clumps of a protein called amyloid beta gradually build up inside the brain. A shuttle could increase the number of therapeutic antibodies accessing the brain, making the therapy more efficient. Other teams are testing the method as a way to carry cancer-destroying antibodies that target brain cancer stemming from metastasized breast cancer.

It’s still early days for these brain shuttles, but efforts are underway to engineer other blood-brain barrier transporters into carriers too. These have different properties compared to transferrin-based ones. Some release their cargo more slowly, for example, making them potentially useful for slow-release drugs with longer therapeutic effects.

Shuttles that can carry gene therapies or gene editors could also change how we treat inherited neurological diseases. Transferrin-based shuttles have already carried antisense oligonucleotides—molecules that block gene function—into the brains of mice and macaque monkeys and delivered functional CRISPR components into mice.

With increasingly powerful AI models that can predict and dream up protein sequences, researchers could further develop more efficient protein shuttles based on natural ones—massively expanding what’s possible for treating brain diseases.

The post Scientists Are Smuggling Large Drugs Into the Brain—Opening a New World of Possible Therapies appeared first on SingularityHub.

2025-06-26 22:00:00

The number of scientific papers relying on AI has quadrupled, and the scope of problems AI can tackle expands by the day.

Modern artificial intelligence is a product of decades of painstaking scientific research. Now, it’s starting to pay that effort back by accelerating progress across academia.

Ever since the emergence of AI as a field of study, researchers have dreamed of creating tools smart enough to accelerate humanity’s endless drive to acquire new knowledge. With the advent of deep learning in the 2010s, this goal finally became a realistic possibility.

Between 2012 and 2022, the proportion of scientific papers that relied on AI in some way quadrupled to almost 9 percent. Researchers are using neural networks to analyze data, conduct literature reviews, or model complex processes across every scientific discipline. And as the technology advances, the scope of problems they can tackle is expanding by the day.

The poster boy for AI’s use in science is undoubtedly Google DeepMind’s AlphaFold, whose creators shared the 2024 Nobel Prize in Chemistry. The model used advances in transformers, the architecture that powers large language models, to solve the “protein folding problem” that had bedeviled scientists for decades.

A protein’s structure determines its function, but previously discovering that shape required painstaking experimental techniques like X-ray crystallography and cryo-electron microscopy. AlphaFold, in comparison, could predict the shape of a protein from nothing more than the series of amino acids making it up, something computer scientists had been trying and failing to do for years.

This made it possible to predict the shape of nearly every protein known to science in just two years, a feat that could have a transformative impact on biomedical research. AlphaFold 3, released in 2024, goes even further. It can predict the structures and interactions of proteins, DNA, RNA, and other biomolecules.

Google has also turned its AI loose on another area of the life sciences, working with Harvard researchers to map human brain connections. The team took ultra-thin slices from a cubic millimeter of human brain tissue and used AI-based imaging technology to map the roughly 50,000 cells and 150 million synaptic connections within.

This is by far the most detailed “connectome” of the human brain produced to date, and the data is now freely available, providing scientists a vital tool for exploring neuronal architecture and connectivity. This could boost our understanding of neurological disorders and potentially provide insights into core cognitive processes like learning and memory.

AI is also revolutionizing the field of materials science. In 2023, Google DeepMind released a graph neural network called GNoME that predicted 2.2 million novel inorganic crystal structures, including 380,000 stable ones that could potentially form the basis of new technologies.

Not to be outdone, other big AI developers have also jumped into this space. Last year, Meta released and open sourced its own transformer-based materials discovery models and, crucially, a dataset with more than 110 million materials simulations that it used to train them, which should allow other researchers to build their own materials science AI models.

Earlier this year, Microsoft released MatterGen, which uses a diffusion model, the same architecture used in many image and video generation models, to produce novel inorganic crystals. After fine-tuning, the researchers showed it could be prompted to produce materials with specific chemical, mechanical, electronic, and magnetic properties.

One of AI’s biggest strengths is its ability to model systems far too complex for conventional computational techniques. This makes it a natural fit for weather forecasting and climate modeling, which currently rely on enormous physical simulations running on supercomputers.

Google DeepMind’s GraphCast model was the first to show the promise of this approach. It used graph neural networks to generate 10-day forecasts in one minute, with higher accuracy than existing gold-standard approaches that take several hours.

AI forecasting is so effective that it has already been deployed by the European Centre for Medium-Range Weather Forecasts, whose Artificial Intelligence Forecasting System went live earlier this year. The model is faster, 1,000 times more energy efficient, and has boosted accuracy by 20 percent.

Microsoft has created what it calls a “foundation model for the Earth system” named Aurora that was trained on more than a million hours of geophysical data. It outperforms existing approaches at predicting air quality, ocean waves, and the paths of tropical cyclones while using orders of magnitude less computation.

AI is also contributing to fundamental discoveries in physics. When the Large Hadron Collider smashes particle beams together, the result is millions of collisions a second. Sifting through all this data to find interesting phenomena is a monumental task, but now researchers are turning to AI to do it for them.

Similarly, researchers in Germany have been using AI to pore through gravitational wave data for signs of neutron star mergers. This helps scientists detect mergers in time to point a telescope at them.

Perhaps most exciting, though, is the promise of AI taking on the role of scientist itself. Combining lab automation technology, robotics, and machine learning, it’s becoming possible to create “self-driving labs.” These take a high-level objective from a researcher, such as achieving a particular yield from a chemical reaction, and then autonomously run experiments until they hit that goal.
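The closed loop such labs run can be sketched in a few lines. Everything here is hypothetical: `run_experiment` is a made-up simulated reaction standing in for robotic hardware, the two tunable conditions and their bounds are invented, and the search strategy (random exploration mixed with small perturbations of the best result so far) is a deliberately simple stand-in. Real self-driving labs often use more sophisticated planners such as Bayesian optimization.

```python
import math
import random

# Hypothetical search space: temperature (deg C) and reagent concentration (mol/L).
BOUNDS = {"temp_c": (20.0, 120.0), "conc": (0.0, 1.0)}


def run_experiment(temp_c, conc):
    """Made-up simulated reaction returning a yield in percent (peak of 90%).

    In a real self-driving lab, this call would dispatch work to lab hardware.
    """
    return 90.0 * math.exp(-((temp_c - 70.0) / 15.0) ** 2 - ((conc - 0.5) / 0.2) ** 2)


def clip(value, lo, hi):
    return max(lo, min(hi, value))


def self_driving_lab(target_yield, budget=500, explore_prob=0.3, seed=1):
    """Closed loop: propose conditions, run, record, repeat until target or budget."""
    rng = random.Random(seed)
    log = []
    best = None  # (yield, temp_c, conc)
    for _ in range(budget):
        if best is None or rng.random() < explore_prob:
            # Explore: sample anywhere within the bounds.
            t = rng.uniform(*BOUNDS["temp_c"])
            c = rng.uniform(*BOUNDS["conc"])
        else:
            # Exploit: perturb the best conditions found so far.
            t = clip(best[1] + rng.gauss(0.0, 5.0), *BOUNDS["temp_c"])
            c = clip(best[2] + rng.gauss(0.0, 0.05), *BOUNDS["conc"])
        y = run_experiment(t, c)
        log.append((t, c, y))
        if best is None or y > best[0]:
            best = (y, t, c)
        if best[0] >= target_yield:
            break  # objective met: stop spending reagents and instrument time
    return best, log


if __name__ == "__main__":
    best, log = self_driving_lab(target_yield=85.0)
    print(f"best yield {best[0]:.1f}% at {best[1]:.1f} C, {best[2]:.2f} mol/L "
          f"after {len(log)} runs")
```

The loop stops as soon as the researcher’s objective is met or the experiment budget runs out; swapping in a smarter proposal strategy changes how many runs that takes, not the structure of the loop.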

Others are going further and actually involving AI in the planning and design of experiments. In 2023, Carnegie Mellon University researchers showed that their AI “Coscientist,” powered by OpenAI’s GPT-4, could autonomously plan and carry out the chemical synthesis of known compounds.

Google has created a multi-agent system powered by its Gemini 2.0 reasoning model that can help scientists generate hypotheses and propose new research projects. And another “AI scientist” developed by Sakana AI wrote a machine learning paper that passed the peer-review process for a workshop at a prestigious AI conference.

Exciting as all this is, though, AI’s takeover of science could have downsides. Neural networks are black boxes whose internal workings are hard to decipher, which can make results challenging to interpret. And many researchers are not familiar enough with the technology to catch common pitfalls that can distort results.

Nonetheless, the incredible power of these models to crunch through data and model things at scales far beyond human comprehension makes them a vital tool. With judicious application, AI could massively accelerate progress in a wide range of fields.

The post The Dream of an AI Scientist Is Closer Than Ever appeared first on SingularityHub.

2025-06-24 22:00:00

Scientists are converting immune cells into super-soldiers that can hunt down and destroy cancer cells.

CAR T therapy has been transformative in the battle against deadly cancers. Scientists extract a patient’s own immune cells, genetically engineer them to target a specific type of cancer, and infuse the boosted cells back into the body to hunt down their prey.

Six therapies have been approved by the FDA for multiple types of blood cancer. Hundreds of other clinical trials are in the works to broaden the immunotherapy’s scope. These include trials aimed at recurrent cancers and autoimmune diseases, such as lupus and systemic sclerosis, in which the body’s immune system destroys its own organs.

But making CAR T cells is a long and expensive process. It requires genetic tinkering in the lab, and patients need chemotherapy to wipe out many of their existing immune cells to make room for the engineered ones. While effective, the treatment takes a massive toll on the body and mind.

It would be faster and potentially more effective to make CAR T cells inside the body. Previous studies have tried to shuttle genes that would do just that into immune cells using viruses or fatty bubbles. But these tend to accumulate in the liver rather than target cells. The approach could also result in hyper-aggressive cells that spark life-threatening immune responses.

Inspired by Covid-19 vaccines, a new study tried shuttling a different kind of genetic cargo into the body. Instead of DNA, the method turned to mRNA, a biomolecule that carries DNA’s instructions to the cell’s protein-making machinery. The new method is more targeted, skipping the liver and heading straight to immune cells, and doesn’t change a cell’s DNA blueprint, potentially making it safer than previous approaches. In rodents and monkeys, a few jabs converted T cells to CAR T cells within hours, and these went on to kill cancer cells. The effects “reset” the animals’ immune systems and lasted for roughly a month with few side effects.

“The achievement has implications for treating” certain cancers and autoimmune disorders, and moves “immunotherapy with CAR T cells to wider clinical use,” wrote Vivek Peche and Stephen Gottschalk at St. Jude Children’s Research Hospital, who were not involved in the study.

Our immune system is a double-edged sword. When working in tandem, immune cells fight off bacteria and viruses and nip cancer cells in the bud. But sometimes one immune-cell type, called a B cell, goes rogue.

Normally, B cells produce antibodies to ward off pathogens. But they can also turn into multiple types of aggressive blood cancer and wreak havoc. Cancerous versions of these sneaky cells develop ways to escape the body’s other immune cell types—like T cells, which are constantly on the lookout for unwanted intruders.

Cancer cells aren’t completely invisible. Tumors have unique proteins dotted all over their surfaces, a sort of “fingerprint” that separates them from healthy cells. In classic CAR T therapy, scientists extract T cells from the patient and genetically engineer them to produce protein “hooks”—dubbed chimeric antigen receptors (CAR)—that grab onto those cancer cell proteins. When infused back into the patient, the cells readily hunt down and destroy cancer cells.

CAR T therapy has saved lives. But it has drawbacks. Genetically engineering cells to produce the hook protein could damage their genome and potentially trigger secondary tumors. The manufacturing process also takes time—sometimes too long for the patient to survive.

An alternative is to directly convert a person’s T cells into CAR T cells inside their body with a shot. There have already been successes using DNA-carrying viruses.

The team wondered if they could achieve the same results with mRNA. Unlike DNA, mRNA molecules don’t integrate into the genome, reducing “the risk of damaging DNA in T cells,” wrote Peche and Gottschalk. The idea is similar to how mRNA vaccines for Covid work. These vaccines are loaded with mRNA instructions to fight off the virus. Once inside cells, these mRNA snippets direct the cells to produce proteins that trigger an immune defense. But mRNA can help cells battle other intruders too, like bacteria, or even cancer.

There’s a problem, though. The fatty shuttles used to deliver the mRNA cargo, known as lipid nanoparticles, tend to collect in liver cells, not T cells. In the new study, the team tweaked the shuttles so they would be drawn toward T cells instead. Compared with conventional nanoparticles, the modified ones rarely accumulated in the liver and more often reached their targets.

Each shuttle contained a soup of mRNA molecules encoding a CAR—the “super soldier” protein that helps T cells seek and destroy cancer cells.

When injected into the bloodstreams of mice, rats, and monkeys, the shot converted T cells into CAR Ts in their blood, spleen, and lymph nodes in a few hours, suggesting that the mRNA instructions worked as expected. The therapy went on to destroy cancers in mice with B cell leukemia and lowered B cell levels in monkeys, with effects lasting at least a month.

The shots also seemed to “reset” the body’s immune system. In monkeys, doses of CAR T initially tanked their B cell levels as expected. But these levels eventually rebounded to normal within weeks—with no signs of the new cells turning cancerous.

Measured against clinical studies that used lab-manufactured CAR T cells, these results “should be sufficient to bring about substantial therapeutic benefits,” wrote Peche and Gottschalk.

The study is the latest to engineer CAR T cells inside the body. But there are caveats.

Compared to directly tinkering with T cell DNA, mRNA is theoretically safer because it doesn’t change the cell’s genetic blueprint. But the method requires functional T cells with the metabolic capacity to act on the added molecular instructions. That isn’t always the case in certain cancers or other diseases, where T cells can break down and become dysfunctional.

However, the system has promise for a myriad of other diseases. Because mRNA doesn’t last long inside the body, it could lower the risk of side effects while still having long-term impact. And because of the B cell “reset,” it’s possible for the immune system to rebuild itself and once again fight off pathogens.

The team is planning a Phase 1 clinical trial to test the therapy. A similar method could also be used to strengthen other immune cell types or ferry other kinds of therapeutic mRNA into the body. It’s “engineering immunotherapy from within,” wrote Peche and Gottschalk.

The post Cancer-Killing Immune Cells Can Now Be Engineered in the Body—With a Vaccine-Like Shot of mRNA appeared first on SingularityHub.

2025-06-23 22:00:00

This framework can help you understand where AI provides value.

If you’ve worried that AI might take your job, deprive you of your livelihood, or maybe even replace your role in society, it probably feels good to see the latest AI tools fail spectacularly. If AI recommends glue as a pizza topping, then you’re safe for another day.

But the fact remains that AI already has definite advantages over even the most skilled humans, and knowing where these advantages arise—and where they don’t—will be key to adapting to the AI-infused workforce.

AI will often not be as effective as a human doing the same job. It won’t always know more or be more accurate. And it definitely won’t always be fairer or more reliable. But it may still be used whenever it has an advantage over humans in one of four dimensions: speed, scale, scope, and sophistication. Understanding these dimensions is the key to understanding AI-human replacement.

First, speed. There are tasks that humans are perfectly good at but are not nearly as fast as AI. One example is restoring or upscaling images: taking pixelated, noisy, or blurry images and making a crisper, higher-resolution version. Humans are good at this; given the right digital tools and enough time, they can fill in fine details. But they are too slow to efficiently process large images or videos.

AI models can do the job blazingly fast, a capability with important industrial applications. AI-based software is used to enhance satellite and remote sensing data, to compress video files, to make video games run better with cheaper hardware and less energy, to help robots make the right movements, and to model turbulence to help build better internal combustion engines.

Real-time performance matters in these cases, and the speed of AI is necessary to enable them.

The second dimension of AI’s advantage over humans is scale. AI will increasingly be used in tasks that humans can do well in one place at a time, but that AI can do in millions of places simultaneously. A familiar example is ad targeting and personalization. Human marketers can collect data and predict what types of people will respond to certain advertisements. This capability is important commercially; advertising is a trillion-dollar market globally.

AI models can do this for every single product, TV show, website, and internet user. This is how the modern ad-tech industry works. Real-time bidding markets price the display ads that appear alongside the websites you visit, and advertisers use AI models to decide when they want to pay that price—thousands of times per second.

Next, scope. AI can be advantageous when it does more things than any one person could, even when a human might do better at any one of those tasks. Generative AI systems such as ChatGPT can engage in conversation on any topic, write an essay espousing any position, create poetry in any style and language, write computer code in any programming language, and more. These models may not be superior to skilled humans at any one of these things, but no single human could outperform top-tier generative models across them all.

It’s the combination of these competencies that generates value. Employers often struggle to find people with talents in disciplines such as software development and data science who also have strong prior knowledge of the employer’s domain. Organizations are likely to continue to rely on human specialists to write the best code and the best persuasive text, but they will increasingly be satisfied with AI when they just need a passable version of either.

Finally, sophistication. AIs can consider more factors in their decisions than humans can, and this can endow them with superhuman performance on specialized tasks. Computers have long been used to keep track of a multiplicity of factors that compound and interact in ways more complex than a human could trace. The 1990s chess-playing computer systems such as Deep Blue succeeded by thinking a dozen or more moves ahead.

Modern AI systems use a radically different approach: Deep learning systems built from many-layered neural networks take account of complex interactions—often many billions—among many factors. Neural networks now power the best chess-playing models and most other AI systems.

Chess is not the only domain where eschewing conventional rules and formal logic in favor of highly sophisticated and inscrutable systems has generated progress. The stunning advance of AlphaFold 2, the structural-biology AI model whose creators Demis Hassabis and John Jumper were recognized with the 2024 Nobel Prize in Chemistry, is another example.

With a 93-million-parameter model, this breakthrough replaced traditional physics-based systems for predicting how sequences of amino acids fold into three-dimensional shapes, even though the model doesn’t account for physical laws. That lack of real-world grounding is not desirable: No one likes the enigmatic nature of these AI systems, and scientists are eager to better understand how they work.

But the sophistication of AI is providing value to scientists, and its use across scientific fields has grown exponentially in recent years.

Those are the four dimensions where AI can excel over humans. Accuracy still matters. You wouldn’t want to use an AI that makes graphics look glitchy or targets ads randomly—yet accuracy isn’t the differentiator. The AI doesn’t need superhuman accuracy. It’s enough for AI to be merely good and fast, or adequate and scalable. Increasing scope often comes with an accuracy penalty, because AI can generalize poorly to truly novel tasks. The 4 S’s are sometimes at odds. With a given amount of computing power, you generally have to trade off scale for sophistication.

Even more interestingly, when an AI takes over a human task, the task can change. Sometimes the AI is just doing things differently. Other times, AI starts doing different things. These changes bring new opportunities and new risks.

For example, high-frequency trading isn’t just computers trading stocks faster; it’s a fundamentally different kind of trading that enables entirely new strategies, tactics, and associated risks. Likewise, AI has developed more sophisticated strategies for the games of chess and Go. And the scale of AI chatbots has changed the nature of propaganda by allowing artificial voices to overwhelm human speech.

It is this “phase shift,” when changes in degree may transform into changes in kind, where AI’s impacts on society are likely to be most keenly felt. All of this points to the places where AI can have a positive impact. When a system has a bottleneck related to speed, scale, scope, or sophistication, or when one of these factors poses a real barrier to being able to accomplish a goal, it makes sense to think about how AI could help.

Equally, when speed, scale, scope, and sophistication are not primary barriers, it makes less sense to use AI. This is why AI auto-suggest features for short communications such as text messages can feel so annoying. They offer little speed advantage and no benefit from sophistication, while sacrificing the sincerity of human communication.

Many deployments of customer service chatbots also fail this test, which may explain their unpopularity. Companies invest in them because of their scalability, and yet the bots often become a barrier to support rather than a speedy or sophisticated problem solver.

Keep this in mind when you encounter a new application for AI or consider AI as a replacement for or an augmentation to a human process. Looking for bottlenecks in speed, scale, scope, and sophistication provides a framework for understanding where AI provides value, and equally where the unique capabilities of the human species give us an enduring advantage.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

The post Will AI Take Your Job? It Depends on These 4 Key Advantages AI Has Over Humans appeared first on SingularityHub.